AMD’s AI Inference Stacks Explained

Audience: engineers, data scientists, and product teams planning AI deployment on AMD platforms.

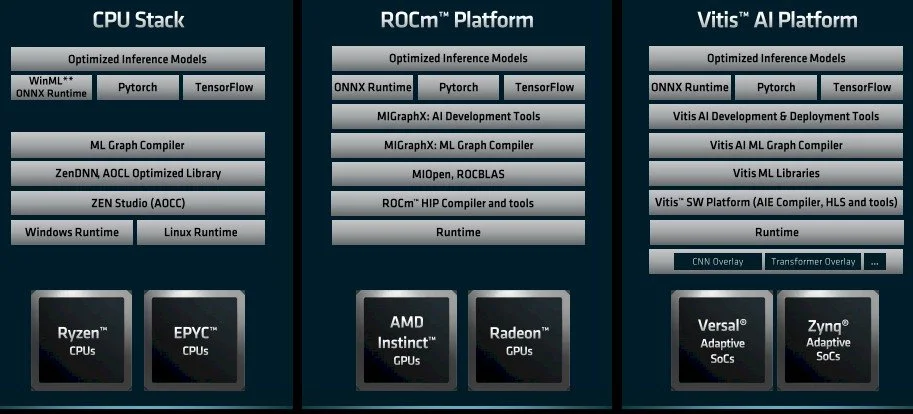

Goal: demystify the three AMD software stacks pictured—CPU Stack, ROCm™ Platform (GPU), and Vitis™ AI Platform (Adaptive SoCs)—so you can choose the right path for your model and ship with confidence.

The image shows a consistent top-down software story across AMD hardware:

Frameworks & runtimes you already use (PyTorch, TensorFlow, ONNX Runtime)

Graph compilers & libraries that optimize models for the target (CPU, GPU, or adaptive SoC)

Toolchains & runtimes that execute efficiently on the hardware

Silicon choices—Ryzen™/EPYC™ CPUs, AMD Instinct™/Radeon™ GPUs, and Versal™/Zynq® adaptive SoCs

Same frameworks at the top, different acceleration layers underneath. That means you don’t have to restart your ML stack when you change targets—just pick the right optimizer/graph compiler and runtime for the device. AMD is showing that no matter what hardware you choose—a CPU, a GPU, or a specialized Adaptive SoC—they have a dedicated but interconnected software stack to support you.

Notice the top layer, "Optimized Inference Models," is the same for all three. Frameworks like PyTorch, TensorFlow, and ONNX Runtime are the common languages of AI development. AMD's core message is: "Develop your model in the framework you already know, and we will provide the tools to run it efficiently on the best hardware for the job."

Stack 1: The CPU Stack (for Ryzen™ and EPYC™ CPUs)

This stack is for running AI workloads directly on a Central Processing Unit (CPU).

Target Hardware:

Ryzen™ CPUs: Consumer-grade processors for desktops and laptops.

EPYC™ CPUs: Server-grade processors for data centers.

When you would use this:

When you don't have a dedicated GPU or AI accelerator.

For AI inference tasks that are not massively parallel and can run efficiently on a few powerful CPU cores.

In cloud environments where you are renting CPU-only virtual machines.

Breaking Down the Layers (from the bottom up):

Hardware (Ryzen, EPYC): The physical processors.

Runtime (Windows, Linux): The operating system that the software runs on.

ZEN Studio (AOCC) & ZenDNN, AOCL Optimized Library: This is the core of the CPU stack.

AOCC (AMD Optimizing C/C++ Compiler): A specialized compiler that fine-tunes code to run as fast as possible on the specific architecture of Zen-based CPUs (Ryzen and EPYC).

ZenDNN (Zen Deep Neural Network Library): This is the "cuDNN for CPUs." It's a library containing highly optimized mathematical functions (like matrix multiplication) that are the building blocks of AI models. When PyTorch needs to perform a calculation, it can call a function in ZenDNN to execute it extremely fast on the CPU.

ML Graph Compiler: An AI model isn't just one big calculation; it's a "graph" of many connected operations. A graph compiler is a smart tool that looks at this entire graph and optimizes it. It might fuse several operations into one, reorder them for better cache usage, and make other changes to increase speed and reduce memory use.

High-Level Runtimes (WinML, PyTorch, TensorFlow): This is the layer developers interact with. You can write your code in PyTorch, and all the layers below work together automatically to execute it on the CPU. WinML/ONNX Runtime is a common framework for deploying pre-trained models, especially on Windows.

Stack 2: The ROCm™ Platform (for AMD Instinct™ and Radeon™ GPUs)

This is AMD's primary stack for high-performance AI on Graphics Processing Units (GPUs). This is the direct competitor to NVIDIA's CUDA platform.

Target Hardware:

AMD Instinct™ GPUs: Data center and supercomputing GPUs, designed for massive AI training and inference (e.g., MI300X).

Radeon™ GPUs: Consumer gaming GPUs that are also very capable for running local LLMs (e.g., RX 7900 XTX).

When you would use this:

For training large AI models from scratch.

For high-speed inference on complex models where performance is critical.

This is the main path for anyone doing serious AI/ML development on AMD GPUs.

Breaking Down the Layers:

Hardware (Instinct, Radeon): The physical GPUs.

Runtime: The fundamental OS-level drivers and software that allow the system to communicate with the GPU.

ROCm™ HIP Compiler and tools: This is the heart of the platform.

ROCm: The foundational software platform that unlocks the GPU for general-purpose computing. It's the CUDA equivalent.

HIP (Heterogeneous-computing Interface for Portability): This is AMD's brilliant strategic tool. HIP is a C++ API that allows developers to write code that can be compiled to run on either AMD GPUs (via ROCm) or NVIDIA GPUs (via CUDA) with minimal changes. This directly attacks NVIDIA's software moat by making it easier for developers to port their existing CUDA applications.

MIOpen, ROCBLAS: These are the core math libraries, just like we discussed before.

MIOpen: The "cuDNN" equivalent for AMD GPUs, providing optimized deep learning primitives.

ROCBLAS: The "cuBLAS" equivalent for AMD GPUs, providing highly optimized linear algebra functions.

MIGraphX: AI Development Tools & ML Graph Compiler: This is the GPU-specific optimization layer. It takes a model from a framework like PyTorch and compiles it into an optimized format that can be executed with maximum efficiency on Instinct or Radeon GPUs.

High-Level Runtimes (ONNX, PyTorch, TensorFlow): Once again, showing that developers can use their preferred frameworks, and ROCm will handle the execution on the GPU.

Stack 3: The Vitis™ AI Platform (for Versal® and Zynq® Adaptive SoCs)

This is the most specialized stack, designed for deploying AI models on "edge" devices. This is not for training models but for running them in real-world applications.

Target Hardware:

Versal® & Zynq® Adaptive SoCs (System-on-a-Chip): These are not just CPUs or GPUs. They are highly flexible chips that combine CPU cores with "programmable logic" (FPGA fabric) and often dedicated AI Engines (AIE). You can think of them as hardware that can be reconfigured to be a perfect, specialized processor for a specific task.

When you would use this:

In a smart camera for real-time object detection.

In a 5G network card to optimize data flow using AI.

In a car's advanced driver-assistance system (ADAS).

Anywhere you need very high performance and low power consumption for a specific AI task.

Breaking Down the Layers:

Hardware (Versal, Zynq): The adaptive chips.

Hardware Overlays (CNN, Transformer): This is a unique and powerful concept. Because the hardware is programmable, AMD provides pre-built "overlays"—highly optimized hardware configurations for specific types of AI models, like Convolutional Neural Networks (CNNs, for vision) or Transformers (for language). This gives you the performance of custom hardware without having to design it from scratch.

Vitis™ SW Platform (AIE Compiler, HLS and tools): This is the low-level software for programming this unique hardware. HLS (High-Level Synthesis) allows developers to write in a higher-level language like C++ and have it compiled down into a hardware configuration.

Vitis ML Libraries & Vitis AI ML Graph Compiler: These are the specialized libraries and compilers that take a trained model and optimize it to run on the AI Engines and programmable logic of the Versal/Zynq chips.

High-Level Runtimes: The workflow is clear: a data scientist trains a model in PyTorch on a GPU (using the ROCm stack). They then hand it off to an embedded engineer who uses the Vitis AI tools to compile and deploy that same model onto a Zynq chip in a final product.

In conclusion, this image brilliantly illustrates AMD's comprehensive strategy: Use the CPU Stack for general-purpose AI, the ROCm Platform for high-performance training and development, and the Vitis AI Platform for deploying those trained models into efficient, specialized, real-world devices.